An inside look at Clearbit’s ICP and lead scoring model

How Clearbit Uses Clearbit: This series shares how we use Clearbit products for acquisition, conversion, and operations.

When I joined Clearbit’s sales team in 2016, we were a young startup eager to talk with any lead that expressed interest in us. Our sales team was small, but we were able to follow up with all of our inbound traffic, ticking our way through lead lists when we had time. Ah, the simple days.

Our lead volume has skyrocketed over the last couple of years, but our sales team hasn’t grown by much. In the last three years, we’ve hired only a few additional people, like our first SDRs a few months ago. Meanwhile, our monthly qualified lead volume has increased by 2.4x. This means we still have a pretty healthy lead/rep ratio. We realized, as many growing startups do, that we not only needed a good way to prioritize leads — so that we were paying attention to the most valuable leads — but we also needed to automate the way we processed such a large volume of leads so that we didn’t have to do it by hand.

So we used our own Clearbit data to create an ideal customer profile (ICP), and then developed a lead scoring model in Salesforce to automatically prioritize leads for us. These models let us identify promising users we may have missed in the crowd and helped us route great leads to the right AEs and SDRs.

I’ll share how we set up these models, but first, here’s a picture of the situation we found ourselves in.

The squeakiest wheel shouldn’t always get the grease

Before we developed a lead scoring system, our sales team would triage “hand raises” — leads who had filled out a form requesting to talk to sales — before any other inbound leads.

“Hand raises” are the leads who fill out a form asking to talk to sales.

The problem was that hand raises often came from understaffed startups who needed one of our reps to explain Clearbit’s value, since they didn’t have the resources to do in-depth research themselves. They frequently didn’t have the budget to buy our products either. But they got our full attention every time.

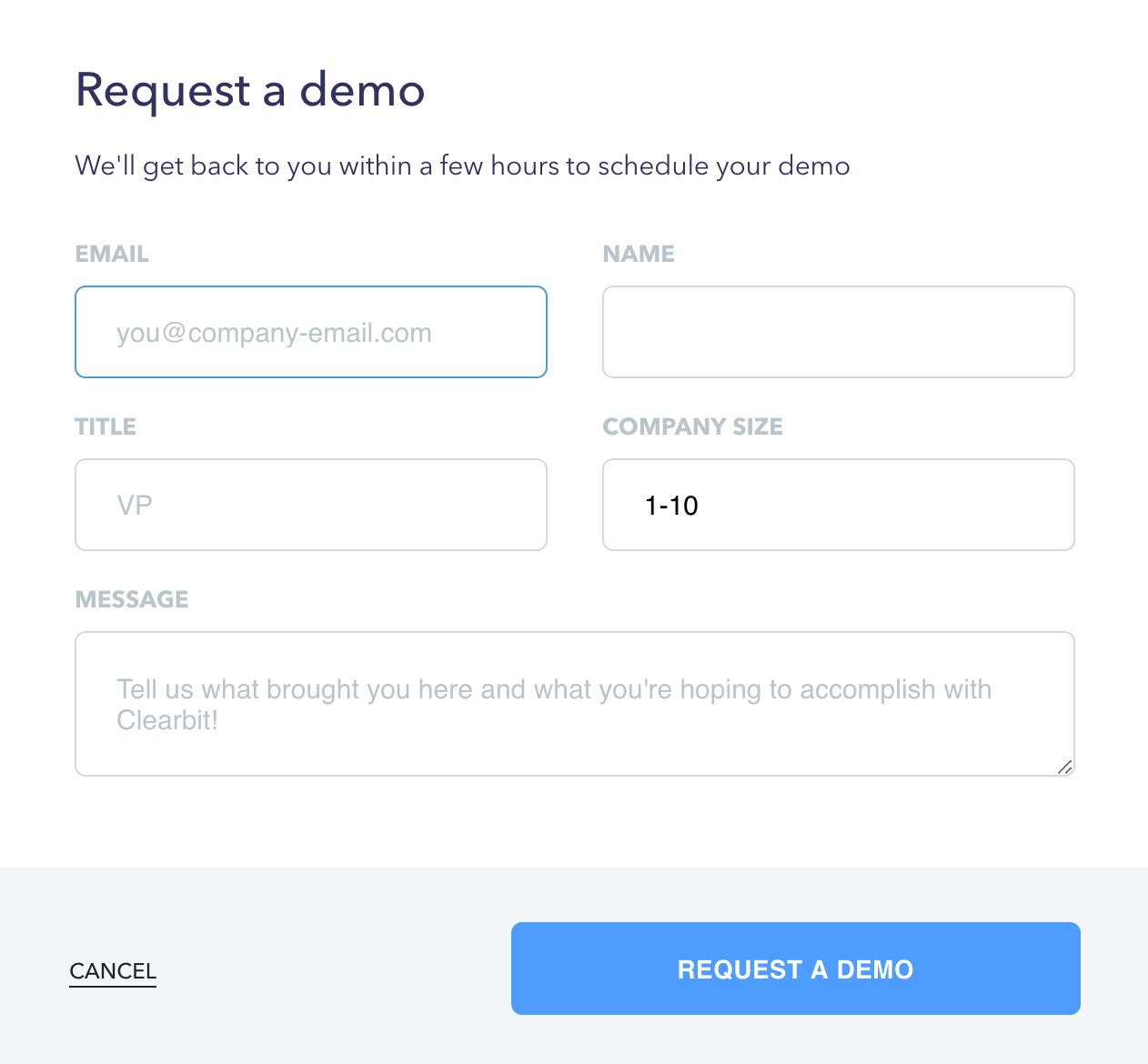

Meanwhile, we had another stream of inbound leads: we had a low-friction, low-risk signup form for self-serve free trial, and free consumer products that got a lot of brand recognition and traffic. Put together, our number of conversions ballooned.

Is signing up for Clearbit’s trial a bit too easy?

Most of those conversions weren’t productive as long-term Clearbit customers, but some of them turned out to be very valuable. There were companies quietly signing up who had the resources and budget to support their own research. They were exactly who we should have been working with, but they flew under the radar.

So as we grew, no one was getting the attention they deserved, and our AEs needed a fair system that gave them good at-bats instead of letting them spend too much time with the wrong people.

The little bit of lead qualification we’d been doing wasn’t doing the job. We'd been running a simple Salesforce report on our inbound leads in order to reduce the list volume. We enriched the records with Clearbit data, then filtered for companies that had over 20 employees and were based in the U.S. While this helped focus our attention for a while, we knew we needed more rigorous, data-driven prioritization as we grew. And besides, we’d chosen those two attributes purely on gut feeling.
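To make that first pass concrete, here's a minimal Python sketch of the two-criteria filter. Our actual filter was a Salesforce report, not code, so the `Lead` fields and sample records below are made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lead:
    # Hypothetical field names; real enriched records will look different.
    email: str
    employees: Optional[int]  # enriched employee count, None if unknown
    country: Optional[str]    # enriched country code, None if unknown


def passes_first_filter(lead: Lead) -> bool:
    """Keep leads from companies with more than 20 employees based in the U.S."""
    return (
        lead.employees is not None
        and lead.employees > 20
        and lead.country == "US"
    )


leads = [
    Lead("a@bigco.example", 250, "US"),
    Lead("b@tiny.example", 5, "US"),
    Lead("c@abroad.example", 400, "DE"),
    Lead("d@unknown.example", None, None),
]

shortlist = [lead.email for lead in leads if passes_first_filter(lead)]
print(shortlist)
```

Note that a lead with missing enrichment data is dropped here, which is one of the blind spots of a filter this simple.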

We decided to expand our list of filter attributes and prioritize leads based on how similar they were to our ICP. The problem was, we weren’t sure what our ICP looked like, exactly. Here’s how we found out.

Modeling our ideal customer profile

In modeling our ideal customer profile, we wanted to discover the indicators that a company would be a good customer:

- Are our ideal customers SaaS companies? Companies with substantial web traffic (high-ranking Alexa scores)?

- How much funding have they raised, if they’re private?

- What industry are they from?

- What sales and marketing technologies do they already use?

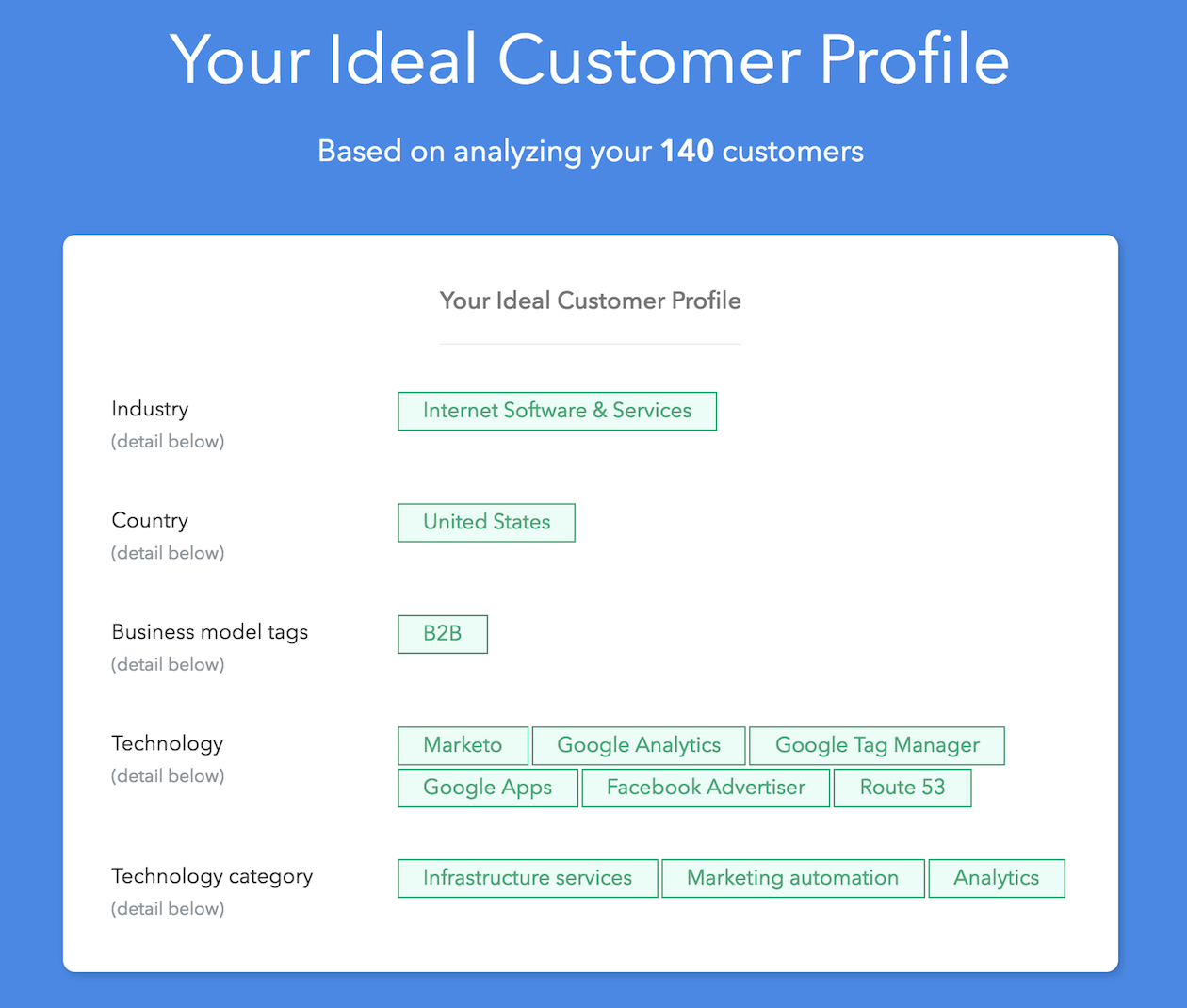

We based the ICP model on our current customers. We made a shortlist of our best Closed/Won opportunities in Salesforce, then enriched their records with Clearbit data.

Technically, there were many possible attributes of our ICP (Clearbit Enrichment supplies 100+ data points), but we mainly focused on:

- industry

- business model tags (SaaS, B2B, etc.)

- technology used (Salesforce, Marketo, Drift, etc.)

- estimated annual revenue

- employee range

- country

We then calculated the “ideal” profile by picking the attributes that more than 50% of all customers shared. That’s a pretty simplistic formula, but it can still reveal a lot of great insights.
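As a rough illustration of the "more than 50%" rule, here's a small Python sketch. The customer records, attribute names, and the `build_icp` helper are hypothetical and stand in for our enriched Closed/Won shortlist; this is not the script we actually ran.

```python
from collections import Counter


def build_icp(customers, threshold=0.5):
    """Return the (attribute, value) pairs shared by more than `threshold` of customers.

    `customers` is a list of sets of (attribute, value) pairs.
    """
    if not customers:
        return set()
    counts = Counter()
    for customer in customers:
        # Each customer is a set, so a pair is counted at most once per customer.
        counts.update(customer)
    total = len(customers)
    return {pair for pair, n in counts.items() if n / total > threshold}


# Made-up example customers.
customers = [
    {("country", "US"), ("tag", "B2B"), ("tag", "SaaS"), ("tech", "Marketo")},
    {("country", "US"), ("tag", "B2B"), ("tech", "Salesforce")},
    {("country", "GB"), ("tag", "B2B"), ("tag", "SaaS"), ("tech", "Salesforce")},
    {("country", "US"), ("tag", "SaaS"), ("tech", "Drift")},
]

icp = build_icp(customers)
print(sorted(icp))
```

In this toy data, Salesforce appears in exactly half of the records, so it misses the strict "more than 50%" cutoff. Where you draw that line is a judgment call.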

The output was a CSV file pinpointing the specific characteristics that predicted customer success, to which we could then compare new leads. Here’s an example ICP result:

This ICP shows that an ideal customer is likely to be a B2B SaaS company in the US that has sophistication in marketing, automation, and analytics software

Now we could take an inbound lead and programmatically compare it to this ICP profile to give it a score.
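One simple way to picture that comparison is to score a lead by the share of ICP attributes it matches. The formula below is an assumption for illustration only, and the ICP and lead attributes are made up.

```python
def icp_score(lead_attrs, icp):
    """Fraction of ICP attributes that the lead also has, from 0.0 to 1.0."""
    if not icp:
        return 0.0
    return len(lead_attrs & icp) / len(icp)


# Hypothetical ICP and enriched lead, both as sets of (attribute, value) pairs.
icp = {("country", "US"), ("tag", "B2B"), ("tag", "SaaS")}
lead_attrs = {("country", "US"), ("tag", "B2B"), ("tag", "Marketplace")}

score = icp_score(lead_attrs, icp)
print(round(score, 2))
```

A weighted version, where some attributes count for more than others, would be a natural next step if a flat match rate turns out to be too coarse.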

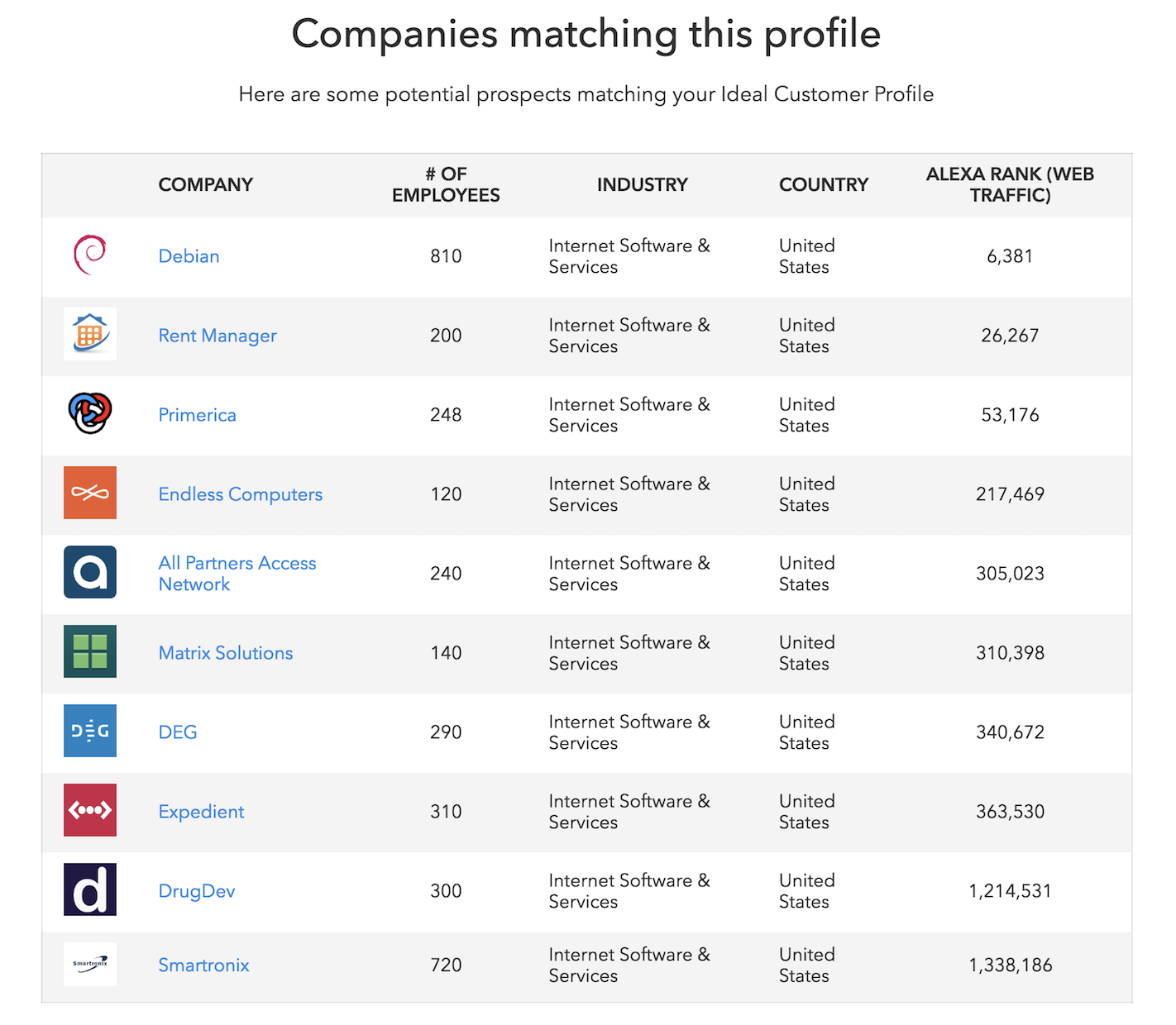

We could also use the ICP for outbound by proactively searching Clearbit’s database for companies that looked similar.

Next, we built the automated lead scoring model.

Building our lead scoring model in Salesforce

Once we’d defined an ICP and its attributes, we built a model in Salesforce to score new leads based on how well they matched our target buyer.

The ICP isn't the only data we take into account for lead scoring. We focus on three kinds of criteria. The first group compares the lead to the ICP, the second looks at the individual person who initiated the trial signup, and the third considers responses from a short survey filled out during signup.

-

Firmographic (ICP)

Does the company look like a good Clearbit customer? We look at whether they’re B2B, their employee count, location, and the technology they use. -

Person data (the individual)

We typically want to have conversations with individuals who are in sales or marketing, and ideally they’re in a leadership or purchasing/decision-making role. -

Questionnaire answers

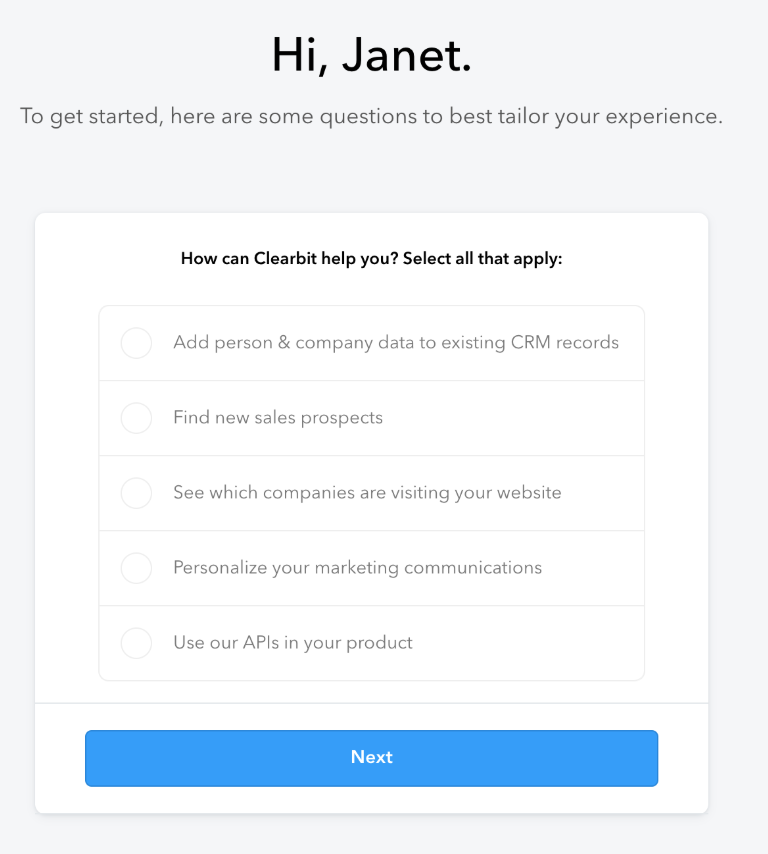

When someone signs up for Clearbit, we ask them to answer two questions about what they think they’d need Clearbit for. If they select the use cases where we’re most differentiated from our competitors, that’s a signal that we’d be a good fit and more likely to close a deal.

Our lead scoring model factors in answers to survey questions posed to new leads who sign up for a Clearbit account.

After the lead scoring model analyzes the prospect’s Clearbit data and survey questions, it buckets the leads into A, B, and F groups to show how well a lead matches up. (It also creates a numerical score, but these three buckets keep it simple for our everyday use.)

- A Class Leads: These leads fit with our ICP, and also have ideal questionnaire answers.

- B Class Leads: These fit with our ICP, but don’t select our ideal use cases in the questionnaire.

- F Class Leads: Everyone else.
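The three buckets above boil down to a short cascade. Here's a minimal Python sketch of it. The two boolean inputs are simplifications: in practice each one is the result of many underlying conditions, and the function name is hypothetical.

```python
def classify(fits_icp: bool, ideal_answers: bool) -> str:
    """Bucket a lead into A, B, or F, checking the strictest class first."""
    if fits_icp and ideal_answers:
        return "A"  # fits the ICP and picked our ideal use cases
    if fits_icp:
        return "B"  # fits the ICP but picked other use cases
    return "F"      # everyone else


for fits, ideal in [(True, True), (True, False), (False, True), (False, False)]:
    print(fits, ideal, classify(fits, ideal))
```

Note that a lead with ideal questionnaire answers but no ICP fit still lands in F under these definitions.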

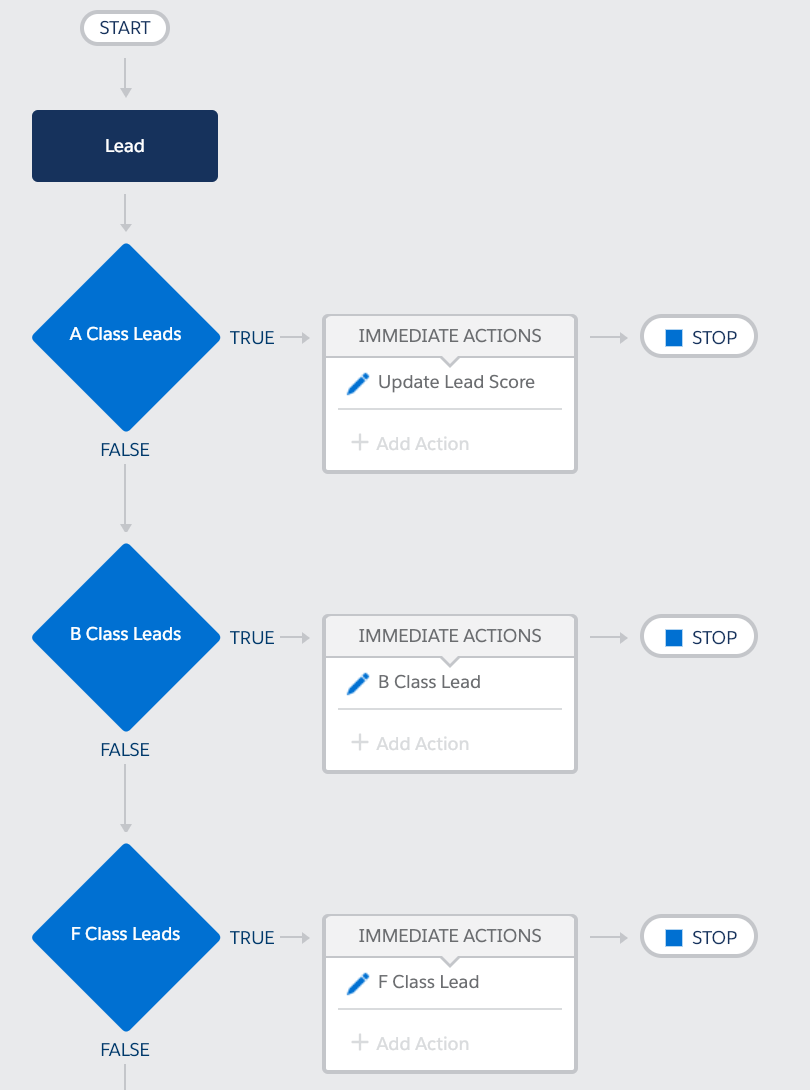

We built the model in Salesforce’s Process Builder. Process Builder is a point-and-click tool you can use to automate workflows in Salesforce using if-then statements. The ability to use Clearbit-enriched data when defining conditions makes Process Builder great for lead scoring and routing, and many of our customers use it the same way. (It's actually a little more complicated to use Process Builder than to just custom-code the model, but it gives us a nice graphical UI so that anyone on the team can visualize our lead scoring process and alter the model without needing to code.)

The screenshot below of the workflow in Process Builder shows how a new inbound lead progresses through qualification. The model first checks whether the lead qualifies as an A Class lead. If not, it checks whether it qualifies as a B. If not, it’s classified as F.

The image below shows how we define A Class. First, we specified 24 data points, or conditions, as the attributes we judge a lead on — such as, “they use Marketo,” “they have offices in the US,” and “this person has a Director title.” Below the condition list is a "Logic" section. This is what we programmed in by hand: a long string of if-then statements using the conditions defined above to qualify the lead.
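For readers who think in code, the "numbered conditions plus a logic string" pattern looks roughly like the Python sketch below. It uses the three example conditions just mentioned. The way they're combined, `2 AND (1 OR 3)`, is a made-up stand-in for our real logic, and the lead field names are hypothetical.

```python
# Numbered conditions, similar in spirit to a Process Builder condition list.
conditions = {
    1: lambda lead: "Marketo" in lead["tech"],    # they use Marketo
    2: lambda lead: lead["country"] == "US",      # they have offices in the US
    3: lambda lead: "Director" in lead["title"],  # this person has a Director title
}


def is_a_class(lead) -> bool:
    """Evaluate every condition, then combine the results by number."""
    r = {number: check(lead) for number, check in conditions.items()}
    # Hypothetical logic string: 2 AND (1 OR 3)
    return r[2] and (r[1] or r[3])


lead_a = {"tech": ["Marketo", "Salesforce"], "country": "US", "title": "Marketing Manager"}
lead_b = {"tech": [], "country": "US", "title": "Director of Sales"}
lead_c = {"tech": ["Marketo"], "country": "CA", "title": "Director of Sales"}

print(is_a_class(lead_a), is_a_class(lead_b), is_a_class(lead_c))
```

Keeping the conditions numbered and separate from the logic means someone can change how they combine without touching the individual checks.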

Salesforce Process Builder being used for Class A lead qualification

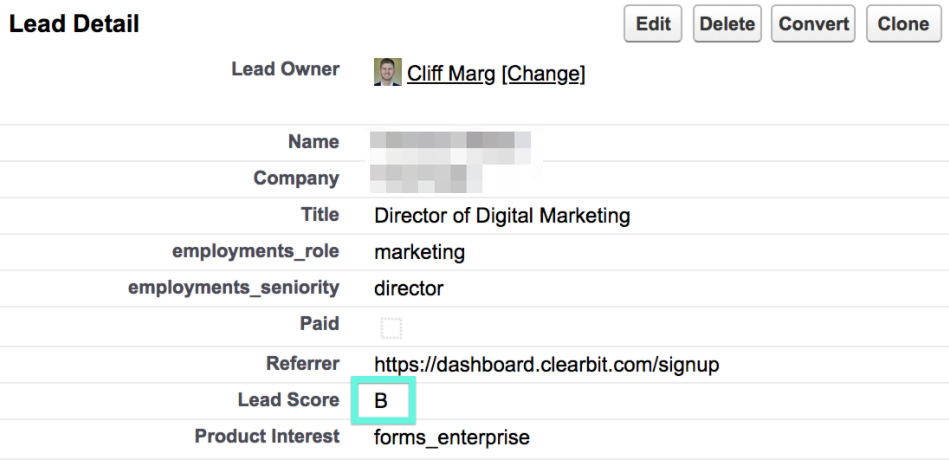

Once a lead is bucketed into the right A/B/F Class, the score shows up on its Visualforce page so that AEs and SDRs can see it immediately when they're scanning through.

We also route and assign leads to either AEs or SDRs based on the score. AEs typically deal with As and Bs, while SDRs vet Fs before initiating any type of personalized outreach.
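Here's a minimal Python sketch of that routing rule. The queue structure and function names are hypothetical, and real assignment (which specific AE or SDR gets the lead) is left out.

```python
from collections import defaultdict


def owner_role(lead_class: str) -> str:
    """As and Bs go to AEs; Fs go to SDRs for vetting."""
    return "AE" if lead_class in ("A", "B") else "SDR"


def build_queues(scored_leads):
    """Group (email, class) pairs into one work queue per role."""
    queues = defaultdict(list)
    for email, lead_class in scored_leads:
        queues[owner_role(lead_class)].append(email)
    return dict(queues)


queues = build_queues([
    ("a@x.example", "A"),
    ("b@y.example", "F"),
    ("c@z.example", "B"),
])
print(queues)
```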

This automated classification allows us to get into the sales cycles earlier with the most important folks.

If you’re just getting started with lead qualification, it’s okay to keep it simple and run a Salesforce report using a few criteria based on who you think your best opportunities are. Data points like employee count, geo location, and standardized role/seniority groupings are probably enough to get started with. You can always add more complexity over time.

I see a lot of companies qualifying with just one data point, so if that’s you, think about what you can add to make the qualification more useful. Our first approach was a simple two-criteria report, but we’ve come a long way, and still have more to go.

A great place for us to take lead scoring next might be to incorporate behavioral data about how users are engaging with our free products. If they’re actively using our free products or maxing out usage quotas every month, that signals familiarity and the fact that they’re getting consistent value. Our lead score could reflect that intent and help us expand accounts that are ready for it.

Another data point to add to lead scoring could be specific types of website engagement. We can use Clearbit Reveal to identify site visitors and increase the lead score for the ones who visit high-purchase-intent pages, like our pricing page, API docs, and setup docs.
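We haven't built this yet, so the Python sketch below is purely illustrative. It assumes we already have a list of paths that an identified visitor viewed. The path prefixes and the bonus value are hypothetical.

```python
# Hypothetical path prefixes for high-purchase-intent pages.
HIGH_INTENT_PREFIXES = ("/pricing", "/docs/api", "/docs/setup")
BONUS_PER_PAGE_TYPE = 5  # hypothetical number of points per page type


def boosted_score(base_score: int, visited_paths) -> int:
    """Add a bonus for each distinct high-intent page type the visitor viewed."""
    hits = {
        prefix
        for prefix in HIGH_INTENT_PREFIXES
        if any(path.startswith(prefix) for path in visited_paths)
    }
    return base_score + BONUS_PER_PAGE_TYPE * len(hits)


print(boosted_score(50, ["/pricing", "/blog/post", "/pricing"]))
print(boosted_score(50, ["/docs/api/enrichment", "/docs/setup"]))
```

Counting each page type once, rather than every pageview, keeps a single visitor who refreshes the pricing page from inflating their own score.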

Essentially, we’ve learned that the profile of our “ideal” customer is a dynamic guide, not a stone statue. We were, and still are, a growing startup, and if our focus shifts every few months, so does our ICP. Regardless, we can bring so much more data into our decision making.

I hope this look at how we wove Clearbit data into our sales process was helpful, and if you want to see more, check out how my teammate Cliff speeds up lead research as an AE.